My posts seem to be getting a little further apart each week… This week, we’ll continue our dashboard series by adding in some pretty graphs for FreeNAS. Before we dive in, as always, we’ll look at the series so far:

- An Introduction

- Organizr

- Organizr Continued

- InfluxDB

- Telegraf Introduction

- Grafana Introduction

- pfSense

- FreeNAS

FreeNAS and InfluxDB

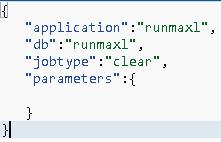

FreeNAS, as many of you know, is a very popular storage operating system, especially in the homelab community. It provides ZFS and a lot more. If you were so inclined, you could install Telegraf on FreeNAS. There is a version available for FreeBSD and I’ve found a variety of sample configuration files and steps. But…I could never really get them working properly. Luckily, we don’t actually need to install anything in FreeNAS to get things working. Why? Because FreeNAS already has something built in: CollectD. CollectD will send metrics directly to Graphite for analysis. But wait…we haven’t touched Graphite at all in this series, have we? No…but thanks to InfluxDB’s protocol support for Graphite, we don’t have to: InfluxDB can receive those metrics itself.

Graphite and InfluxDB

To enable support for Graphite, we have to modify the InfluxDB configuration file. But, before we get to that, we need to go ahead and create our new InfluxDB database and provision a user. If you take a look back at part 4 of this series, we cover this in more depth, so we’ll be quick about it now. We’ll start by connecting to our InfluxDB server via SSH and logging into InfluxDB:

influx -username influxadmin -password YourPassword

Now we will create the new database for our Graphite statistics and grant access to that database for our influx user:

CREATE DATABASE "GraphiteStats"

GRANT ALL ON "GraphiteStats" TO "influxuser"

And now we can modify our InfluxDB configuration:

sudo nano /etc/influxdb/influxdb.conf

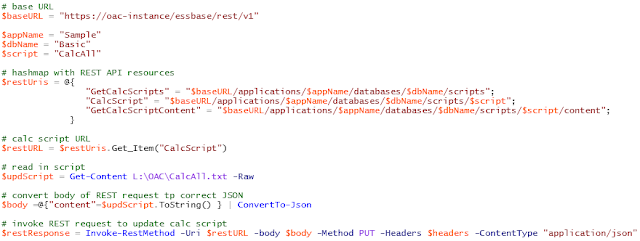

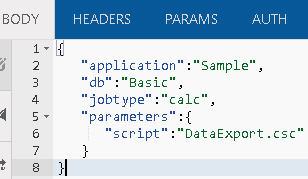

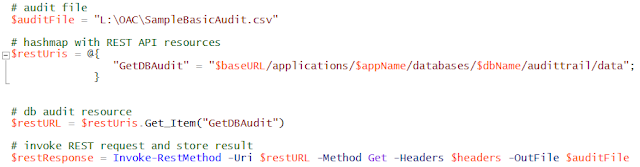

Our modifications should look like this:

![]()

And here’s the code for those who like to copy and paste:

[[graphite]]

# Determines whether the graphite endpoint is enabled.

enabled = true

database = "GraphiteStats"

# retention-policy = ""

bind-address = ":2003"

protocol = "tcp"

# consistency-level = "one"

Next we need to restart InfluxDB:

sudo systemctl restart influxdb

InfluxDB should be ready to receive data now.
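If you’d like to confirm that the Graphite listener is really up before moving on to FreeNAS, one option is to push a single test metric at it. Graphite’s plaintext protocol is just one line per metric: a path, a value, and a Unix timestamp. Here’s a minimal Python sketch of that idea. The host name influxdb.local and the metric path test.homelab.ping are placeholders I made up, so swap in your own:

```python
import socket
import time


def graphite_line(path, value, timestamp=None):
    """Format one metric in Graphite's plaintext protocol: 'path value timestamp'."""
    if timestamp is None:
        timestamp = int(time.time())
    return f"{path} {value} {int(timestamp)}\n"


def send_metric(host, path, value, port=2003, timeout=5.0):
    """Open a TCP connection to the Graphite listener and send a single metric."""
    line = graphite_line(path, value)
    with socket.create_connection((host, port), timeout=timeout) as sock:
        sock.sendall(line.encode("ascii"))
    return line


# Example call (hypothetical host and metric path, not run automatically):
# send_metric("influxdb.local", "test.homelab.ping", 1)
```

If the call returns without a connection error, something is listening on port 2003. Keep in mind that the test metric will land in GraphiteStats alongside the real data.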

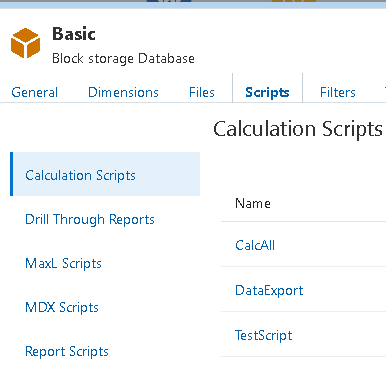

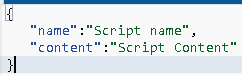

Enabling FreeNAS Remote Monitoring

Log into your FreeNAS via the web and click on the Advanced tab:

![]()

Now we simply check the box that reports CPU utilization as a percent, enter either the FQDN or IP address of our InfluxDB server as the remote Graphite server, and click Save:

![]()

Once the save has completed, FreeNAS should start logging to your InfluxDB database. Now we can start visualizing things with Grafana!
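Before heading to Grafana, it can be worth checking that metrics are actually arriving. One way to do that, sketched below under a few assumptions, is to ask InfluxDB’s 1.x HTTP API for the list of measurements in GraphiteStats. The helper names are my own, the port is the InfluxDB default of 8086, and the optional contains filter is just a convenience for narrowing down a very long list. Note that this sketch passes the credentials as URL parameters over plain HTTP, so treat it as a lab-only check:

```python
import json
import urllib.parse
import urllib.request


def build_query_url(host, database, query, username, password, port=8086):
    """Build an InfluxDB 1.x /query URL for a single InfluxQL statement."""
    params = urllib.parse.urlencode(
        {"db": database, "q": query, "u": username, "p": password}
    )
    return f"http://{host}:{port}/query?{params}"


def measurement_names(response):
    """Pull measurement names out of a decoded SHOW MEASUREMENTS response."""
    names = []
    for result in response.get("results", []):
        for series in result.get("series", []):
            names.extend(row[0] for row in series.get("values", []))
    return names


def list_measurements(host, username, password, database="GraphiteStats", contains=""):
    """Query InfluxDB and return the measurement names containing a substring."""
    url = build_query_url(host, database, "SHOW MEASUREMENTS", username, password)
    with urllib.request.urlopen(url, timeout=10) as reply:
        names = measurement_names(json.load(reply))
    return [name for name in names if contains in name]


# Example call (hypothetical host and credentials, not run automatically):
# print(list_measurements("influxdb.local", "influxuser", "YourPassword", contains="cpu"))
```

An empty list a minute or two after saving usually means FreeNAS can’t reach port 2003 on the InfluxDB server.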

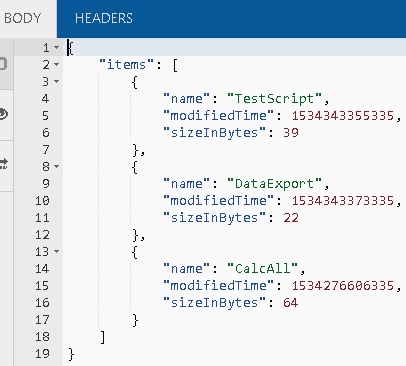

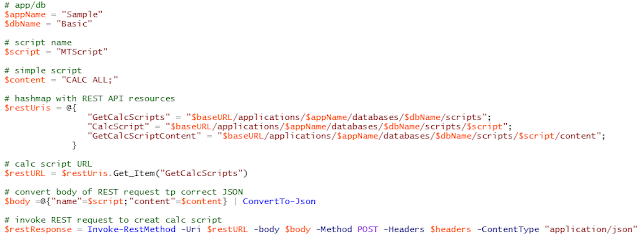

FreeNAS and Grafana

Adding the Data Source

Before we can start to look at all of our statistics, we need to set up our new data source in Grafana. In Grafana, hover over the settings icon on the left menu and click on data sources:

![]()

Next, click the Add Data Source button, enter the name, database type, URL, database name, username, and password, and then click Save & Test:

![]()

Assuming everything went well, you should see this:

![]()

Finally…we can start putting together some graphs.

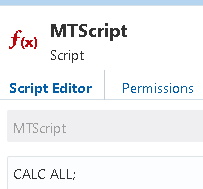

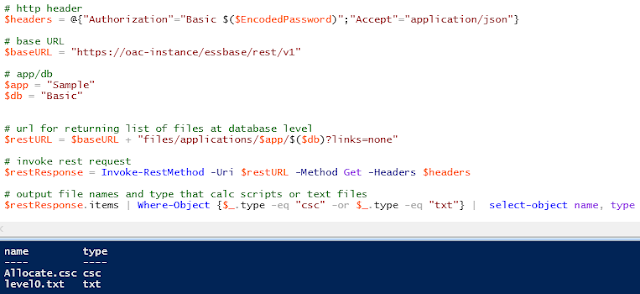

CPU Usage

We’ll start with something basic, like CPU usage. Because we checked the percentage box while configuring FreeNAS, this should be pretty straightforward. We’ll create a new dashboard and graph and start off by selecting our new data source and then clicking Select Measurement:

![]()

The good news is that we are starting with our aggregate CPU usage. The bad news is that this list is HUGE. So huge, in fact, that it doesn’t even fit in the box. This means as we look for things beyond our initial CPU piece, we have to search to find them. Fun… But let’s get started by adding all five of our CPU average metrics to our graph:

![]()

We also need to adjust our Axis settings to match up with our data:

![]()

Now we just need to set up our legend. This is optional, but I really like the table look:

![]()

Finally, we’ll make sure that we have a nice name for our graph:

![]()

This should leave us with a nice looking CPU graph like this:

![]()

Memory Usage

Next up, we have memory usage. This time we have to search for our metric, because as I mentioned, the list is too long to fit:

![]()

We’ll add all of the memory metrics until it looks something like this:

![]()

As with our CPU Usage, we’ll adjust our Axis settings. This time we need to change the Unit to bytes from the IEC menu and enter a range. Our range will not be a simple 0 to 100 this time. Instead, we set the range from 0 to the amount of RAM in your system in bytes. So…if you have 256GB of RAM, it’s 256*1024*1024*1024 (274877906944):

![]()
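If you don’t feel like punching that into a calculator, the same conversion is a one-liner. A small Python sketch, where ram_bytes is just a name I picked:

```python
def ram_bytes(gib):
    """Convert a RAM size in GiB to bytes (1 GiB = 1024**3 bytes)."""
    return int(gib * 1024 ** 3)


# 256 GiB of RAM gives the Y-max value used for the memory graph.
print(ram_bytes(256))  # prints 274877906944
```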

And our legend:

![]()

Finally a name:

![]()

And here’s what we get at the end:

![]()

Network Utilization

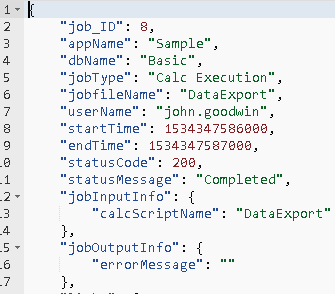

Now that we have covered CPU and Memory, we can move on to network! Network is slightly more complex, so we get to use the math function! Let’s start with our new graph and search for our network interface. In my case this is ix1, my main 10Gb interface:

![]()

Once we add that, we’ll notice that the numbers aren’t quite right. This is because FreeNAS is reporting the number in octets. An octet is 8 bits, which is a byte, so these values are bytes rather than the bits that the FreeNAS networking reports use. So, we need to multiply the number by 8 to arrive at a matching number. We use the math function with *8 as our value. We can also add our rx value while we are at it:

![]()

Now our math should look good and the numbers should match the FreeNAS networking reports. Because the values are now in bits, we need to change our Axis settings to bits per second:

![]()
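The *8 step is really just a unit conversion: an octet is 8 bits, so multiplying an octets-per-second rate by 8 gives bits per second. Here’s a tiny Python sketch of the same math, with a helper name of my own choosing, that you can use to sanity check what the graph shows:

```python
def octets_to_bits(octets_per_second):
    """Convert a rate in octets (bytes) per second to bits per second."""
    return octets_per_second * 8


# A fully saturated 10Gb link moves 1.25 billion octets per second.
print(octets_to_bits(1_250_000_000))  # prints 10000000000
```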

And we need our table (again optional if you aren’t interested):

![]()

And finally a nice name for our network graph:

![]()

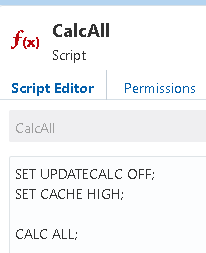

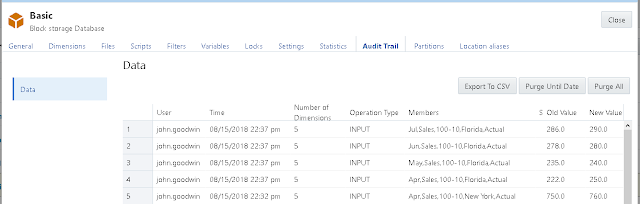

Disk Usage

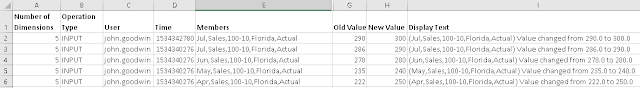

Disk usage is a bit tricky in FreeNAS. Why? A few reasons actually. One issue is the way that FreeNAS reports usage. For instance, if I have a volume that has a dataset, and that dataset has multiple shares, free disk space is reported the same for each share. Or, even worse, if I have a volume with multiple datasets and zVols, the free space may be reported correctly for some, but not for others. Here’s my storage configuration for one of my volumes:

![]()

Let’s start by looking at each of these in Grafana so that we can see what the numbers tell us. For ISO, we see the following options:

![]()

So far, this looks great: my ISO dataset has free, reserved, and used metrics. Let’s look at the numbers and compare them to the above. We’ll start by looking at df_complex-free using bytes (from the IEC menu) for our units:

![]()

Perfect! This matches our available number from FreeNAS. Now let’s check out df_complex-used:

![]()

Again perfect! This matches our used numbers exactly. So far, we are in good shape. This is true for ISO, TestCIFSShare, and TestNFS, which are all datasets. The problem is that TestiSCSI and WindowsiSCSI don’t show up at all. Both of these are zVols. So, from what I can tell, zVols are not reported by FreeNAS for remote monitoring. I’m hoping I’m just doing something wrong, but I’ve looked everywhere and I can’t find any stats for a zVol.

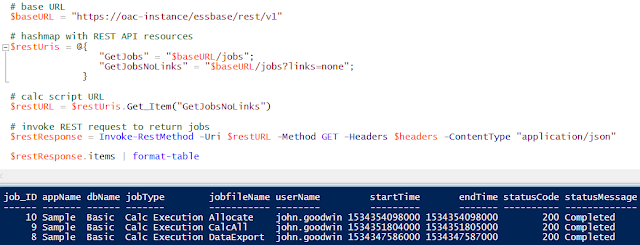

Let’s assume for a moment that we just wanted to see the aggregate of all of our datasets on a given volume. Well…that doesn’t work either. Why? Two reasons. First, in Grafana (and InfluxDB), I can’t add metrics together. That’s a bit of a pain, but surely there’s an aggregate value. That brings us to the second reason: I looked at the value for df_complex-used for my z8x2TB dataset, and I get this:

![]()

Clearly 26.4 MB does not equal 470.6GB. So now what? Great question…if anyone has any ideas, let me know, as I’d happily update this post with better information and give credit to anyone that can provide it! In the meantime, we’ll use a different share that only has a single dataset, so that we can avoid these annoying math and reporting issues. My Veeam backup share is a volume with a single dataset. Let’s start by creating a singlestat and pulling in this metric:

![]()

This should give us the amount of free storage available in bytes. This is likely a giant number. Copy and paste that number somewhere (I chose Excel). My number is 4651271147041. Now we can switch to our used number:

![]()

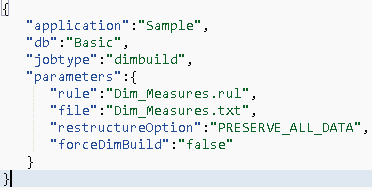

For me, this is an even bigger number: 11818579150671, which I will also copy and paste into Excel. Now I will do some simple math to add the two together, which gives a total of 16469850297712. So why did we go through that exercise in basic math? Because Grafana and InfluxDB won’t do it for us…that’s why. Now we can turn our singlestat into a gauge. We’ll start with our used storage number from above. Now we need to change our options:

![]()

We start by checking the Show Gauge box, leave the min set to 0, and change our max to the value we calculated as our total space, which in my case is 16469850297712. We can also set thresholds. I set my thresholds to 80% and 90%. To do this, I took my 16469850297712 and multiplied by .8 and .9. I put these two numbers together, separated by a comma, and put them in for thresholds: 13175880238169.60,14822865267940.80. Finally I change the unit to bytes from the IEC menu. The final result should look like this:

![]()

Now we can see how close we are to our max along with thresholds on a nice gauge.
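For those who would rather skip the spreadsheet, the gauge math can be sketched in a few lines of Python. This is only the arithmetic from above wrapped in a hypothetical helper (gauge_settings is my own name, not anything from Grafana), and it uses Decimal so the thresholds come out exact rather than as rounded floats:

```python
from decimal import Decimal


def gauge_settings(free_bytes, used_bytes, ratios=("0.8", "0.9")):
    """Return the gauge max (free + used) and a comma-separated thresholds string."""
    total = free_bytes + used_bytes
    thresholds = ",".join(f"{total * Decimal(ratio):.2f}" for ratio in ratios)
    return total, thresholds


# The free and used values copied from the two singlestat queries.
total, thresholds = gauge_settings(4651271147041, 11818579150671)
print(total)       # prints 16469850297712
print(thresholds)  # prints 13175880238169.60,14822865267940.80
```

The two printed values go into the gauge’s max and thresholds fields. They only change when the size of the volume changes, so this isn’t something that needs to run on a schedule.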

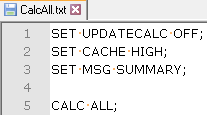

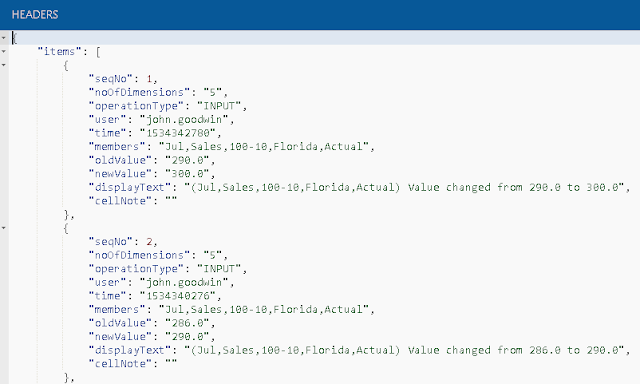

CPU Temperature

Now that we have the basics covered (CPU, RAM, Network, and Storage), we can move on to CPU temperatures. While we will cover temps later in an IPMI post, not everyone running FreeNAS will have the luxury of IPMI. So…we’ll take what FreeNAS gives us. If we search our metrics for temp, we’ll find that every thread of every core has its own metric. Now, I really don’t have a desire to see every single core, so I chose to pick the first and last core (0 and 31 for me):

![]()

The numbers will come back pretty high, as they are in kelvin and multiplied by 10. So, we’ll use our handy math function again (/10-273.15) and we should get something like this:

![]()
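To see why /10-273.15 does the trick: dividing by 10 turns the raw value back into kelvin, and subtracting 273.15 converts kelvin to Celsius. A quick Python sketch of the same conversion, where the function name and the sample reading of 3131.5 are made up for illustration:

```python
def decikelvin_to_celsius(raw):
    """Convert a temperature reported in tenths of a kelvin to degrees Celsius."""
    return raw / 10 - 273.15


# A made-up raw reading of 3131.5 is 313.15 K, which is roughly 40 degrees Celsius.
print(round(decikelvin_to_celsius(3131.5), 2))
```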

Next we’ll adjust our Axis to use Celsius for our unit and adjust the min and max to go from 35 to 60:

![]()

And because I like my table:

![]()

At the end, we should get something like this:

![]()

Conclusion

In the end, my dashboard looks like this:

![]()

This post took quite a bit more time than any of my previous posts in the series. I had built my FreeNAS dashboard previously, so I wasn’t expecting it to be a long, drawn-out post. But, as I was going through it, I felt that more explanation was warranted, and as such I ended up with a pretty long post. I welcome any feedback for making this post better, as I’m sure I’m not doing it the best way…just my way. Until next time…

The post Build a Homelab Dashboard: Part 8, FreeNAS appeared first on EPM Marshall.