In the EPM Cloud 18.10 release, a few new commands were added to the EPM Automate utility. These are also available through the REST API, as the utility is built on top of the API.

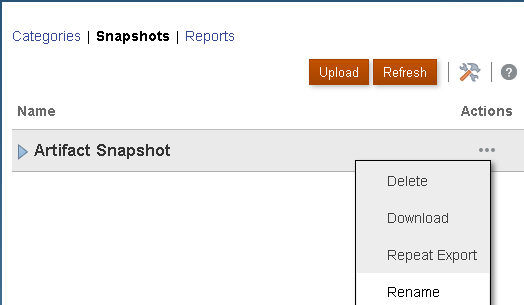

One annoyance for me with EPM Automate and the REST API has been the inability to rename a snapshot, even though this has always been possible through the web UI.

Not being able to rename outside the UI made it difficult to automate archiving the daily snapshot in the cloud instance before the next snapshot overwrote it. You could download, rename and upload the snapshot, but this overcomplicates what should be a simple rename.

With the 18.10 release it is now possible to rename a snapshot with a new EPM Automate command.

To rename a snapshot, the syntax for the utility is:

`epmautomate renamesnapshot <existing snapshot name> <new snapshot name>`

Using EPM Automate and a script, it is simple to rename the snapshot. In the following example, the daily snapshot is renamed to include the current date.
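The original script is not reproduced here. As a hedged sketch, the Python below drives EPM Automate through `subprocess` and relies on the following assumptions:

- `epmautomate` is on the PATH.
- A session has already been opened with `epmautomate login`.
- The daily snapshot carries its default name “Artifact Snapshot”.

The YYYY-MM-DD suffix and the helper names are illustrative choices, not part of the utility.

```python
import datetime
import subprocess
from typing import List, Optional

# Default name of the snapshot taken by daily maintenance (assumed unchanged).
DAILY_SNAPSHOT = "Artifact Snapshot"


def archive_name(day: datetime.date, base: str = DAILY_SNAPSHOT) -> str:
    """Append the date to the snapshot name (YYYY-MM-DD is an arbitrary choice)."""
    return f"{base} {day.isoformat()}"


def rename_command(existing: str, new: str) -> List[str]:
    """Arguments for: epmautomate renamesnapshot <existing snapshot name> <new snapshot name>."""
    return ["epmautomate", "renamesnapshot", existing, new]


def archive_daily_snapshot(day: Optional[datetime.date] = None) -> int:
    """Rename the daily snapshot to a dated name and return EPM Automate's exit code.

    Assumes 'epmautomate login' has already been run in this session.
    """
    day = day or datetime.date.today()
    cmd = rename_command(DAILY_SNAPSHOT, archive_name(day))
    # Passing a list (not a shell string) keeps the space in the snapshot name intact.
    return subprocess.run(cmd, check=False).returncode
```

Called after logging in, `archive_daily_snapshot()` would rename “Artifact Snapshot” to something like “Artifact Snapshot 2018-10-05”. On Windows the utility is usually invoked as `epmautomate.bat`, so the first list element may need adjusting.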

This means the snapshot is now archived and the next daily maintenance will not overwrite it.

Please note, though, that snapshots have a retention period, which currently stands at 60 days, and a default maximum storage size of 150GB. If this size is exceeded, snapshots are removed, oldest first, to bring the total back down to 150GB.
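To make the removal rule concrete, here is a small model of the behaviour as described. It works in two steps:

1. Snapshots older than the retention period are dropped.
2. The oldest remaining snapshots are removed until the total fits within the limit.

This only illustrates the stated policy and is not Oracle's implementation. The `Snapshot` type, the order of the two checks and the reading of GB as binary gigabytes are all assumptions.

```python
import datetime
from dataclasses import dataclass
from typing import List

RETENTION_DAYS = 60
MAX_BYTES = 150 * 1024**3  # 150GB default; binary gigabytes are an assumption


@dataclass
class Snapshot:
    name: str
    created: datetime.date
    size_bytes: int


def surviving_snapshots(snaps: List[Snapshot], today: datetime.date,
                        retention_days: int = RETENTION_DAYS,
                        max_bytes: int = MAX_BYTES) -> List[Snapshot]:
    """Return the snapshots that would be kept under the described policy."""
    cutoff = today - datetime.timedelta(days=retention_days)
    # Step 1: drop anything older than the retention period, then order oldest first.
    kept = sorted((s for s in snaps if s.created >= cutoff), key=lambda s: s.created)
    # Step 2: remove the oldest snapshots until the total size is within the limit.
    total = sum(s.size_bytes for s in kept)
    while kept and total > max_bytes:
        total -= kept.pop(0).size_bytes
    return kept
```

With 80GB, 50GB and 40GB snapshots inside the retention period (170GB in total), only the 80GB one, being the oldest, would need to go.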

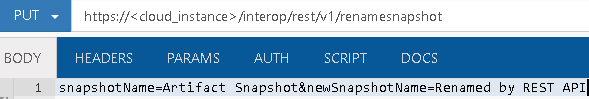

The documentation does not yet provide details on how to rename a snapshot using the REST API, but I am sure it will be updated in the near future.

Not to worry, I have worked it out and the format to rename a snapshot using the REST API is:

If the rename is successful, a status of 0 will be returned.

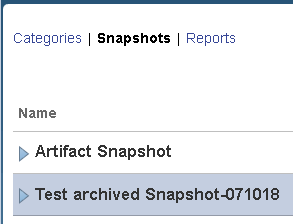

In the UI you will see the snapshot has been renamed.

If the rename was not successful, a status that is not equal to 0 will be returned and an error message will be available in the details parameter.
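The exact request is not reproduced in the text above, so the following is a hedged sketch using Python's standard library. These details are assumptions to check against the current REST API documentation:

- the resource path `/interop/rest/11.1.2.3.600/snapshots/<snapshot name>/rename`;
- the `newSnapshotName` query parameter;
- the use of PUT with basic authentication.

The `status` and `details` fields are the ones described above.

```python
import base64
import json
import urllib.error
import urllib.parse
import urllib.request
from typing import Tuple

API_VERSION = "11.1.2.3.600"  # interop (migration) REST API version (assumption)


def rename_snapshot_request(base_url: str, user: str, password: str,
                            snapshot: str, new_name: str) -> urllib.request.Request:
    """Build, without sending, a rename request (path and parameter name are assumptions)."""
    path = f"/interop/rest/{API_VERSION}/snapshots/{urllib.parse.quote(snapshot)}/rename"
    query = urllib.parse.urlencode({"newSnapshotName": new_name})
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    return urllib.request.Request(f"{base_url.rstrip('/')}{path}?{query}", method="PUT",
                                  headers={"Authorization": f"Basic {token}"})


def check_result(body: str) -> Tuple[bool, str]:
    """Status 0 means success; otherwise 'details' carries the error message."""
    reply = json.loads(body)
    return reply.get("status") == 0, reply.get("details") or ""


def rename_snapshot(base_url: str, user: str, password: str,
                    snapshot: str, new_name: str) -> Tuple[bool, str]:
    req = rename_snapshot_request(base_url, user, password, snapshot, new_name)
    try:
        with urllib.request.urlopen(req) as resp:
            return check_result(resp.read().decode())
    except urllib.error.HTTPError as err:
        # Error responses may still carry a JSON body with status and details.
        return check_result(err.read().decode())
```

If the assumptions hold, `rename_snapshot(url, user, pwd, "Artifact Snapshot", "Artifact Snapshot 2018-10-05")` should return `(True, "")` on success, or `(False, message)` with the error text from `details`.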


The functionality will only rename snapshots and does not work on other file types.

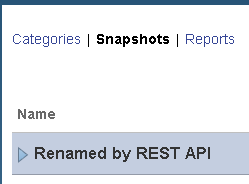

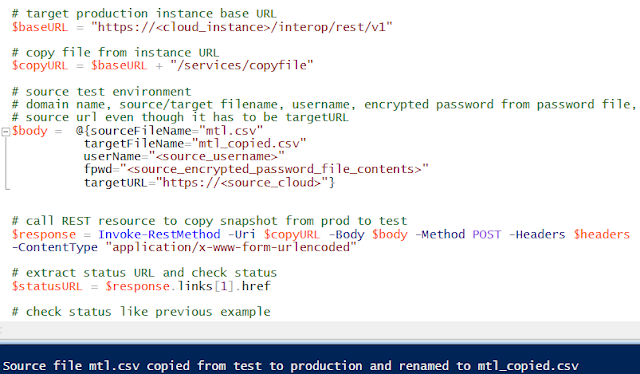

It is an easy task to script the renaming of a snapshot using the REST API. In the following example, I log into a test instance, rename the daily snapshot, and then copy the daily snapshot from the production instance to the test instance. This means the production application is ready to be restored to the test environment if needed, and the test daily snapshot has been archived.

The above section of the script renames the test snapshot, the next section copies the production snapshot to the test instance.

When the REST API is called to copy a snapshot, it returns a URL that allows you to keep checking the status of the copy until it completes.
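Polling that URL until the job finishes can be sketched as follows. The loop relies on the convention used in this post, where 0 means success and other final values mean failure. Treating -1 as “still running” is an assumption based on how the interop API reports in-progress jobs. The HTTP call is passed in as a function, so the loop can be exercised without a live instance.

```python
import json
import time
from typing import Callable

IN_PROGRESS = -1  # assumed "still running" status value


def wait_for_job(fetch: Callable[[str], str], status_url: str,
                 interval: float = 10.0, timeout: float = 3600.0,
                 sleep: Callable[[float], None] = time.sleep) -> dict:
    """Poll status_url until the job leaves the in-progress state; return the final reply."""
    waited = 0.0
    while True:
        reply = json.loads(fetch(status_url))
        if reply.get("status") != IN_PROGRESS:
            return reply  # 0 = success, anything else = failure (see 'details')
        if waited >= timeout:
            raise TimeoutError(f"job at {status_url} still running after {timeout}s")
        sleep(interval)
        waited += interval
```

In a real script, `fetch` would perform an authenticated GET on the URL returned by the copy request, for example with `urllib.request.urlopen`, and return the response body as text.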

Now, in the test instance, the daily snapshot has been archived and the instance also contains a copy of the production snapshot.

It is also possible to copy files between EPM Cloud instances using the EPM Automate command “copyfilefrominstance”. This command was introduced in the 18.07 release, and its format is:

`epmautomate copyfilefrominstance <source_filename> <username> <password_file> <source_url> <source_domain> <target_filename>`

Achieving this with the REST API is very similar to my previous copy snapshot example.

Say I want to copy a file from the test instance to the production instance and rename it.

An example script to do this:
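A minimal Python sketch follows, under several assumptions:

- The request is sent to the target (production) instance.
- The resource path `/interop/rest/11.1.2.3.600/services/PBCS/copyfilefrominstance` is hypothetical.
- The form parameter names are hypothetical and simply mirror the EPM Automate arguments. REST takes a password rather than a password file.
- The status URL is the `self` entry in the reply's `links`.

Nothing is sent until the request is passed to `urllib.request.urlopen`.

```python
import base64
import json
import urllib.parse
import urllib.request
from typing import Optional

API_VERSION = "11.1.2.3.600"  # assumption


def copy_file_request(target_url: str, target_user: str, target_password: str,
                      source_file: str, source_user: str, source_password: str,
                      source_url: str, source_domain: str,
                      target_file: str) -> urllib.request.Request:
    """Build a copy-from-instance POST addressed to the target (production) instance.

    The resource path and parameter names are hypothetical; they mirror the
    arguments of 'epmautomate copyfilefrominstance'.
    """
    params = urllib.parse.urlencode({
        "sourceFileName": source_file,
        "userName": source_user,
        "password": source_password,
        "sourceURL": source_url,
        "sourceDomain": source_domain,
        "targetFileName": target_file,  # renaming happens as part of the copy
    })
    token = base64.b64encode(f"{target_user}:{target_password}".encode()).decode()
    return urllib.request.Request(
        f"{target_url.rstrip('/')}/interop/rest/{API_VERSION}/services/PBCS/copyfilefrominstance",
        data=params.encode(),
        method="POST",
        headers={"Authorization": f"Basic {token}",
                 "Content-Type": "application/x-www-form-urlencoded"},
    )


def job_status_url(reply_body: str) -> Optional[str]:
    """Pick the status URL out of the reply's 'links' (assumed to be the 'self' link)."""
    for link in json.loads(reply_body).get("links", []):
        if link.get("rel") == "self":
            return link.get("href")
    return None
```

The URL returned by `job_status_url` can then be polled until the copy completes, in the same way as for a snapshot copy.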

The file has been copied to the production instance and renamed.

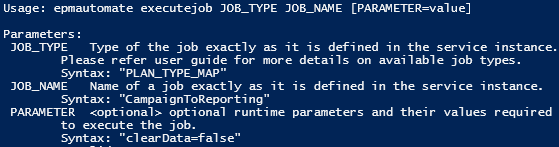

When the 18.10 monthly readiness document was first published, it included details about another EPM Automate command called “executejob”:

“executejob, which enables you to run any job type defined in planning, consolidation and close, or tax reporting applications”

This was subsequently removed from the document, but the command does exist in the utility.

The command appears to remove the need for separate commands to run jobs. Instead of using commands such as “refreshcube”, “runbusinessrule” or “runplantypemap”, you can just run “executejob” with the correct job type and name.

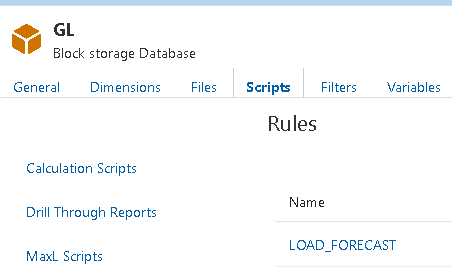

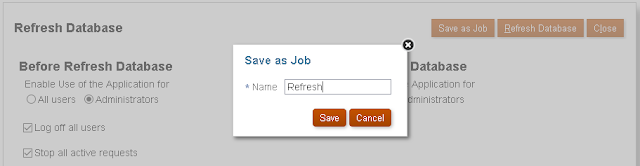

For example, say I create a new refresh database job and name it “Refresh”.

The job type name for a database refresh is “CUBE_REFRESH”, so to run the refresh job with EPM Automate you could use the following:
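As a hedged illustration, the sketch below builds and runs that call from Python. It assumes the argument order `executejob <job type> <job name>` and an existing EPM Automate login session. The helper names are hypothetical, and the job type is checked against the job types listed in this post.

```python
import subprocess
from typing import List

# Job types listed for the 18.10 release.
JOB_TYPES = {
    "RULES", "RULESET", "PLAN_TYPE_MAP", "IMPORT_DATA", "EXPORT_DATA",
    "EXPORT_METADATA", "IMPORT_METADATA", "CUBE_REFRESH", "CLEAR_CUBE",
}


def executejob_command(job_type: str, job_name: str) -> List[str]:
    """Arguments for 'epmautomate executejob <job type> <job name>' (order assumed)."""
    if job_type not in JOB_TYPES:
        raise ValueError(f"unknown job type: {job_type}")
    return ["epmautomate", "executejob", job_type, job_name]


def run_job(job_type: str, job_name: str) -> int:
    """Run the job through EPM Automate; assumes 'epmautomate login' has already been run."""
    return subprocess.run(executejob_command(job_type, job_name), check=False).returncode
```

For the example above, `run_job("CUBE_REFRESH", "Refresh")` would invoke `epmautomate executejob CUBE_REFRESH Refresh`. Validating the job type up front gives a clearer error than waiting for the utility to reject it.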

The command is really replicating what has already been available in the REST API for running jobs.

The current list of job types is:

- RULES
- RULESET
- PLAN_TYPE_MAP
- IMPORT_DATA
- EXPORT_DATA
- EXPORT_METADATA
- IMPORT_METADATA
- CUBE_REFRESH
- CLEAR_CUBE

I am not going to go into detail about the REST API as I have already covered it previously.

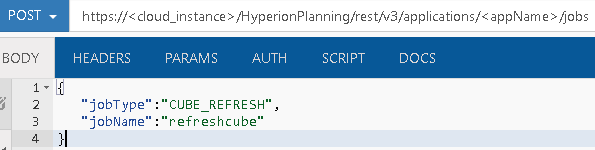

The format for the REST API is as follows:
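A minimal sketch of that call makes the following assumptions:

- It uses the Planning REST resource `/HyperionPlanning/rest/v3/applications/<application>/jobs`.
- The JSON body carries `jobType` and `jobName`.
- Requests use basic authentication.
- The URL to poll is the `self` link in the reply.

The application name “Vision” in the test is hypothetical.

```python
import base64
import json
import urllib.parse
import urllib.request


def run_job_request(base_url: str, application: str, user: str, password: str,
                    job_type: str, job_name: str) -> urllib.request.Request:
    """Build the POST that launches a Planning job (resource path assumed, v3)."""
    url = (f"{base_url.rstrip('/')}/HyperionPlanning/rest/v3/applications/"
           f"{urllib.parse.quote(application)}/jobs")
    body = json.dumps({"jobType": job_type, "jobName": job_name}).encode()
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    return urllib.request.Request(url, data=body, method="POST", headers={
        "Authorization": f"Basic {token}",
        "Content-Type": "application/json",
    })


def summarise_reply(reply_body: str) -> dict:
    """Pull out the job id, status and the URL to poll (assumed to be the 'self' link)."""
    reply = json.loads(reply_body)
    links = {link.get("rel"): link.get("href") for link in reply.get("links", [])}
    return {"jobId": reply.get("jobId"), "status": reply.get("status"),
            "statusUrl": links.get("self")}
```

Sending the request with `urllib.request.urlopen` and passing the response body to `summarise_reply` gives the job details and the URL for checking progress.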

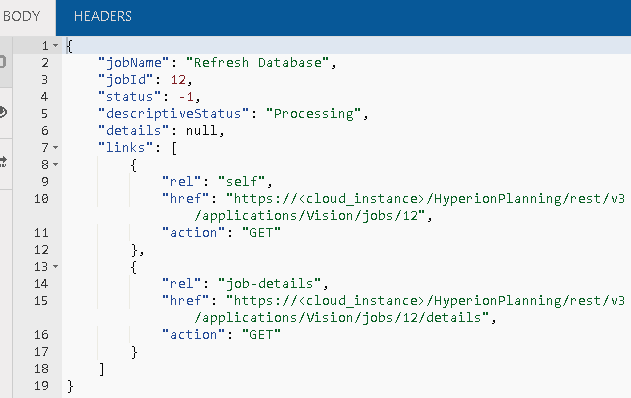

The response will include details of the job and a URL that can be used to keep checking the status.

I was really hoping that the functionality would allow any job available through the scheduler to be run, for instance “Restructure Cube” or “Administration Mode”, but it looks like it only covers jobs that can be created. Hopefully that is one for the future.

In the 18.05 release, a new EPM Automate command called “runDailyMaintenance” appeared, which lets you run the daily maintenance process without waiting for the maintenance window. This is useful if new patches are available and you don’t want to wait to apply them. In the 18.10 release, the command gained a new parameter that lets you skip the next daily maintenance process.

The format for the command is:

`epmautomate rundailymaintenance skipNext=true|false`

The following example will run the maintenance process and skip the next scheduled one:

`epmautomate rundailymaintenance skipNext=true -f`

I included -f to bypass the confirmation prompt:

“Are you sure you want to run daily maintenance (yes/no): no?[Press Enter]”

The REST API documentation does not currently cover the command, but as the EPM Automate utility is built on top of the API, the functionality is available.

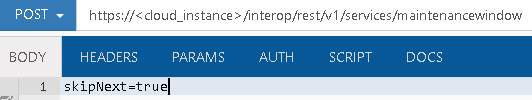

The call requires a POST method, with the skipNext parameter included in the request body.

The response will include a URL to check the status of the maintenance process.

When the process has completed, a status of 0 will be returned.

It is worth pointing out that as part of the maintenance steps, the web application service is restarted so you will not be able to connect to the REST API to check the status while this is happening.
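Because the service restarts partway through, a status check should treat connection failures as “try again later” rather than as errors. The sketch below builds the POST with `skipNext` in a form-encoded body, then polls while tolerating network errors up to a limit. The following are assumptions, not documented values:

- the resource path `.../services/PBCS/runDailyMaintenance`;
- the form encoding;
- basic authentication;
- -1 meaning “in progress”.

```python
import base64
import json
import time
import urllib.error
import urllib.parse
import urllib.request
from typing import Callable

API_VERSION = "11.1.2.3.600"  # assumption


def maintenance_request(base_url: str, user: str, password: str,
                        skip_next: bool) -> urllib.request.Request:
    """POST asking the instance to run daily maintenance now (path is hypothetical)."""
    body = urllib.parse.urlencode({"skipNext": "true" if skip_next else "false"}).encode()
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    return urllib.request.Request(
        f"{base_url.rstrip('/')}/interop/rest/{API_VERSION}/services/PBCS/runDailyMaintenance",
        data=body, method="POST",
        headers={"Authorization": f"Basic {token}",
                 "Content-Type": "application/x-www-form-urlencoded"})


def wait_through_restart(fetch: Callable[[str], str], status_url: str,
                         interval: float = 60.0, max_failures: int = 30,
                         sleep: Callable[[float], None] = time.sleep) -> dict:
    """Poll until status != -1, ignoring connection errors while the service restarts."""
    failures = 0
    while True:
        try:
            reply = json.loads(fetch(status_url))
            failures = 0  # the service is reachable again
            if reply.get("status") != -1:
                return reply  # 0 means the maintenance completed successfully
        except (urllib.error.URLError, ConnectionError):
            failures += 1
            if failures > max_failures:
                raise  # give up: the service has been unreachable for too long
        sleep(interval)
```

`urllib.error.HTTPError` is a subclass of `URLError`, so an error page served while the web application is restarting is also treated as transient.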

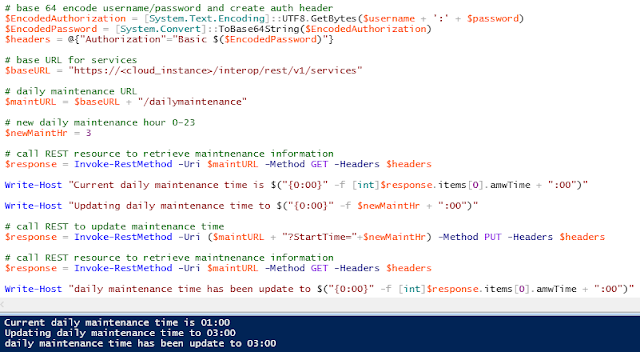

Another piece of functionality which has been available through the REST API for a long time, but not EPM Automate, is the ability to return or set the maintenance window time.

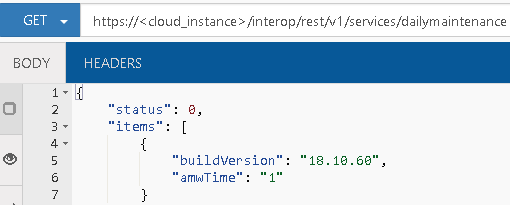

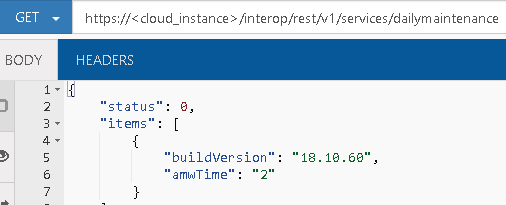

To return the maintenance time, a GET method is required with the following URL format:

The “amwTime” (Automated Maintenance Window Time) is the scheduled hour for the maintenance process, so it will be between 0 and 23.

To update the scheduled time, a PUT method is required, and the URL requires a parameter called “StartTime”.

If the update is successful, a status of 0 will be returned.

You can then check that the maintenance time has been updated.

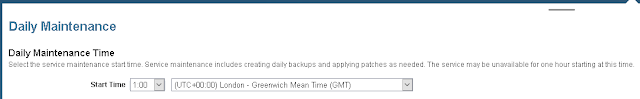

The following script checks the current maintenance time and updates it to 03:00.
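A hedged Python version of such a script is below. It assumes that the resource `/interop/rest/11.1.2.3.600/services/PBCS/dailymaintenance` behaves as follows:

- A GET returns JSON containing `amwTime`.
- A PUT accepts a `StartTime` query parameter.

The path, service name and basic authentication are assumptions. `amwTime`, `StartTime`, the 0 to 23 range and the status of 0 come from the steps described above.

```python
import base64
import json
import urllib.parse
import urllib.request
from typing import Optional

API_VERSION = "11.1.2.3.600"  # interop REST API version (assumption)
TARGET_HOUR = 3  # 03:00


def _maintenance_request(base_url: str, user: str, password: str,
                         method: str, query: Optional[dict] = None) -> urllib.request.Request:
    # Hypothetical resource path for the maintenance window settings.
    url = f"{base_url.rstrip('/')}/interop/rest/{API_VERSION}/services/PBCS/dailymaintenance"
    if query:
        url += "?" + urllib.parse.urlencode(query)
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    return urllib.request.Request(url, method=method,
                                  headers={"Authorization": f"Basic {token}"})


def get_time_request(base_url: str, user: str, password: str) -> urllib.request.Request:
    return _maintenance_request(base_url, user, password, "GET")


def set_time_request(base_url: str, user: str, password: str,
                     hour: int) -> urllib.request.Request:
    if not 0 <= hour <= 23:
        raise ValueError("maintenance hour must be between 0 and 23")
    return _maintenance_request(base_url, user, password, "PUT", {"StartTime": hour})


def needs_update(get_reply_body: str, hour: int = TARGET_HOUR) -> bool:
    # amwTime is the scheduled hour (0-23); int() also copes with a numeric string.
    return int(json.loads(get_reply_body)["amwTime"]) != hour


def ensure_maintenance_hour(base_url: str, user: str, password: str,
                            hour: int = TARGET_HOUR) -> bool:
    """Return True if the time was changed, False if it was already set to `hour`."""
    with urllib.request.urlopen(get_time_request(base_url, user, password)) as resp:
        if not needs_update(resp.read().decode(), hour):
            return False
    with urllib.request.urlopen(set_time_request(base_url, user, password, hour)) as resp:
        status = json.loads(resp.read().decode()).get("status")
    if status != 0:
        raise RuntimeError(f"maintenance time update failed with status {status}")
    return True
```

Checking before updating keeps the script idempotent, so it can be scheduled to run repeatedly without issuing unnecessary PUT requests.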

I did notice a problem: even though the REST API sets the time, the change is not reflected in the UI.

It looks like a bug to me. Anyway, until next time…